Running etcd on a Single Node

1. Overview

This article is part of a series of etcd study notes.

1.1 VirtualBox VM Inventory

The following VirtualBox virtual machines are used while learning etcd:

| No. | VM | Hostname | IP | CPU | Memory | Notes |
|---|---|---|---|---|---|---|
| 1 | ansible-master | ansible | 192.168.56.120 | 2 cores | 4 GB | Ansible control node |
| 2 | ansible-node1 | etcd-node1 | 192.168.56.121 | 2 cores | 2 GB | Ansible managed node 1 |
| 3 | ansible-node2 | etcd-node2 | 192.168.56.122 | 2 cores | 2 GB | Ansible managed node 2 |
| 4 | ansible-node3 | etcd-node3 | 192.168.56.123 | 2 cores | 2 GB | Ansible managed node 3 |

A later article in the series will write an Ansible playbook that deploys the etcd cluster.

Operating system details:

```sh
[root@etcd-node1 ~]# cat /etc/centos-release
CentOS Linux release 7.9.2009 (Core)
[root@etcd-node1 ~]# hostname -I
192.168.56.121 10.0.3.15
[root@etcd-node1 ~]#
```

2. Deploying an etcd Cluster on a Single Server

This follows the official guide How to Set Up a Demo etcd Cluster.

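- Of etcd's default ports, 2379 serves client connections (the API that clients interact with), while 2380 is used for peer communication between cluster members.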

To run an etcd cluster on one server without the members' ports and paths colliding, first plan the layout:

| No. | Node name | Working directory | IP | Peer port | Client port |
|---|---|---|---|---|---|
| 1 | node1 | /srv/etcd/node1 | 192.168.56.121 | 23801 | 23791 |
| 2 | node2 | /srv/etcd/node2 | 192.168.56.121 | 23802 | 23792 |
| 3 | node3 | /srv/etcd/node3 | 192.168.56.121 | 23803 | 23793 |
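The plan follows a simple rule: member *i* uses peer port 2380*i*, client port 2379*i*, and directory /srv/etcd/node*i*. Because the three start scripts that follow differ only in that index, the per-member settings could be derived instead of copied. Below is a minimal sketch under that assumption. The helper names (`peer_port`, `client_port`, `initial_cluster`, `etcd_cmd`) are hypothetical, `etcd_cmd` only prints the command line (a dry run) rather than starting etcd, and unlike the original scripts it passes an absolute `--data-dir` instead of `cd`-ing into the directory first.

```sh
#!/bin/sh
# Sketch with hypothetical helpers: derive each member's settings from its
# index, following the plan table: peer port 2380<i>, client port 2379<i>,
# working directory /srv/etcd/node<i>. Only valid for single-digit indices.
HOST=192.168.56.121
BASE_DIR=/srv/etcd
NODES=3
TOKEN=token-01

peer_port()   { echo "2380$1"; }
client_port() { echo "2379$1"; }

# Build the --initial-cluster value shared by every member, e.g.
# node1=http://HOST:23801,node2=http://HOST:23802,node3=http://HOST:23803
initial_cluster() {
  local i=1 out=""
  while [ "$i" -le "$NODES" ]; do
    # ${out:+$out,} expands to "<previous entries>," only when out is non-empty
    out="${out:+$out,}node${i}=http://${HOST}:$(peer_port "$i")"
    i=$((i + 1))
  done
  echo "$out"
}

# Print (do not execute) the etcd command line for member $1.
etcd_cmd() {
  local n="$1"
  echo "etcd --data-dir=${BASE_DIR}/node${n}/data.etcd --name node${n}" \
    "--initial-advertise-peer-urls http://${HOST}:$(peer_port "$n")" \
    "--listen-peer-urls http://${HOST}:$(peer_port "$n")" \
    "--advertise-client-urls http://${HOST}:$(client_port "$n")" \
    "--listen-client-urls http://${HOST}:$(client_port "$n")" \
    "--initial-cluster $(initial_cluster)" \
    "--initial-cluster-state new --initial-cluster-token ${TOKEN}"
}
```

Calling `initial_cluster` yields the same string the original scripts print after `CLUSTER:`, and `etcd_cmd 2` prints the flags that node2's script passes to etcd.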

Then create the working directories and write three start scripts:

```sh
# Create the three working directories
[root@etcd-node1 ~]# cd /srv/etcd
[root@etcd-node1 etcd]# mkdir -p node1 node2 node3
```

The first start script:

```sh
[root@etcd-node1 etcd]# cat node1/start.sh
TOKEN=token-01
CLUSTER_STATE=new
NAME_1=node1
NAME_2=node2
NAME_3=node3
HOST_1=192.168.56.121
HOST_2=192.168.56.121
HOST_3=192.168.56.121
PEER_PORT_1=23801
PEER_PORT_2=23802
PEER_PORT_3=23803
API_PORT_1=23791
API_PORT_2=23792
API_PORT_3=23793
START_PATH_1=/srv/etcd/node1
START_PATH_2=/srv/etcd/node2
START_PATH_3=/srv/etcd/node3
CLUSTER=${NAME_1}=http://${HOST_1}:${PEER_PORT_1},${NAME_2}=http://${HOST_2}:${PEER_PORT_2},${NAME_3}=http://${HOST_3}:${PEER_PORT_3}
echo "CLUSTER:${CLUSTER}"
# Node 1
THIS_NAME=${NAME_1}
THIS_IP=${HOST_1}
cd ${START_PATH_1}
nohup etcd --data-dir=data.etcd --name ${THIS_NAME} \
--initial-advertise-peer-urls http://${THIS_IP}:${PEER_PORT_1} --listen-peer-urls http://${THIS_IP}:${PEER_PORT_1} \
--advertise-client-urls http://${THIS_IP}:${API_PORT_1} --listen-client-urls http://${THIS_IP}:${API_PORT_1} \
--initial-cluster ${CLUSTER} \
--initial-cluster-state ${CLUSTER_STATE} --initial-cluster-token ${TOKEN} &
[root@etcd-node1 etcd]#
```

The second start script:

```sh
[root@etcd-node1 etcd]# cat node2/start.sh
TOKEN=token-01
CLUSTER_STATE=new
NAME_1=node1
NAME_2=node2
NAME_3=node3
HOST_1=192.168.56.121
HOST_2=192.168.56.121
HOST_3=192.168.56.121
PEER_PORT_1=23801
PEER_PORT_2=23802
PEER_PORT_3=23803
API_PORT_1=23791
API_PORT_2=23792
API_PORT_3=23793
START_PATH_1=/srv/etcd/node1
START_PATH_2=/srv/etcd/node2
START_PATH_3=/srv/etcd/node3
CLUSTER=${NAME_1}=http://${HOST_1}:${PEER_PORT_1},${NAME_2}=http://${HOST_2}:${PEER_PORT_2},${NAME_3}=http://${HOST_3}:${PEER_PORT_3}
echo "CLUSTER:${CLUSTER}"
# Node 2
THIS_NAME=${NAME_2}
THIS_IP=${HOST_2}
cd ${START_PATH_2}
nohup etcd --data-dir=data.etcd --name ${THIS_NAME} \
--initial-advertise-peer-urls http://${THIS_IP}:${PEER_PORT_2} --listen-peer-urls http://${THIS_IP}:${PEER_PORT_2} \
--advertise-client-urls http://${THIS_IP}:${API_PORT_2} --listen-client-urls http://${THIS_IP}:${API_PORT_2} \
--initial-cluster ${CLUSTER} \
--initial-cluster-state ${CLUSTER_STATE} --initial-cluster-token ${TOKEN} &
[root@etcd-node1 etcd]#
```

The third start script:

```sh
[root@etcd-node1 etcd]# cat node3/start.sh
TOKEN=token-01
CLUSTER_STATE=new
NAME_1=node1
NAME_2=node2
NAME_3=node3
HOST_1=192.168.56.121
HOST_2=192.168.56.121
HOST_3=192.168.56.121
PEER_PORT_1=23801
PEER_PORT_2=23802
PEER_PORT_3=23803
API_PORT_1=23791
API_PORT_2=23792
API_PORT_3=23793
START_PATH_1=/srv/etcd/node1
START_PATH_2=/srv/etcd/node2
START_PATH_3=/srv/etcd/node3
CLUSTER=${NAME_1}=http://${HOST_1}:${PEER_PORT_1},${NAME_2}=http://${HOST_2}:${PEER_PORT_2},${NAME_3}=http://${HOST_3}:${PEER_PORT_3}
echo "CLUSTER:${CLUSTER}"
# Node 3
THIS_NAME=${NAME_3}
THIS_IP=${HOST_3}
cd ${START_PATH_3}
nohup etcd --data-dir=data.etcd --name ${THIS_NAME} \
--initial-advertise-peer-urls http://${THIS_IP}:${PEER_PORT_3} --listen-peer-urls http://${THIS_IP}:${PEER_PORT_3} \
--advertise-client-urls http://${THIS_IP}:${API_PORT_3} --listen-client-urls http://${THIS_IP}:${API_PORT_3} \
--initial-cluster ${CLUSTER} \
--initial-cluster-state ${CLUSTER_STATE} --initial-cluster-token ${TOKEN} &
[root@etcd-node1 etcd]#
```

Start the three scripts one after another (each must be executable, e.g. `chmod +x /srv/etcd/node*/start.sh`):

```sh
[root@etcd-node1 ~]# cd /srv/etcd/node1 && ./start.sh && cd -
CLUSTER:node1=http://192.168.56.121:23801,node2=http://192.168.56.121:23802,node3=http://192.168.56.121:23803
/root
[root@etcd-node1 ~]# nohup: appending output to ‘nohup.out’
[root@etcd-node1 ~]# cd /srv/etcd/node2 && ./start.sh && cd -
CLUSTER:node1=http://192.168.56.121:23801,node2=http://192.168.56.121:23802,node3=http://192.168.56.121:23803
/root
[root@etcd-node1 ~]# nohup: appending output to ‘nohup.out’
[root@etcd-node1 ~]# cd /srv/etcd/node3 && ./start.sh && cd -
CLUSTER:node1=http://192.168.56.121:23801,node2=http://192.168.56.121:23802,node3=http://192.168.56.121:23803
/root
[root@etcd-node1 ~]# nohup: appending output to ‘nohup.out’
```

Check the etcd processes and the ports they listen on:

```sh
[root@etcd-node1 ~]# ps -ef|grep etcd
root 1584 1 0 23:24 pts/0 00:00:00 etcd --data-dir=data.etcd --name node1 --initial-advertise-peer-urls http://192.168.56.121:23801 --listen-peer-urls http://192.168.56.121:23801 --advertise-client-urls http://192.168.56.121:23791 --listen-client-urls http://192.168.56.121:23791 --initial-cluster node1=http://192.168.56.121:23801,node2=http://192.168.56.121:23802,node3=http://192.168.56.121:23803 --initial-cluster-state new --initial-cluster-token token-01
root 1590 1 0 23:25 pts/0 00:00:00 etcd --data-dir=data.etcd --name node2 --initial-advertise-peer-urls http://192.168.56.121:23802 --listen-peer-urls http://192.168.56.121:23802 --advertise-client-urls http://192.168.56.121:23792 --listen-client-urls http://192.168.56.121:23792 --initial-cluster node1=http://192.168.56.121:23801,node2=http://192.168.56.121:23802,node3=http://192.168.56.121:23803 --initial-cluster-state new --initial-cluster-token token-01
root 1602 1 0 23:25 pts/0 00:00:00 etcd --data-dir=data.etcd --name node3 --initial-advertise-peer-urls http://192.168.56.121:23803 --listen-peer-urls http://192.168.56.121:23803 --advertise-client-urls http://192.168.56.121:23793 --listen-client-urls http://192.168.56.121:23793 --initial-cluster node1=http://192.168.56.121:23801,node2=http://192.168.56.121:23802,node3=http://192.168.56.121:23803 --initial-cluster-state new --initial-cluster-token token-01
root 1610 1395 0 23:25 pts/0 00:00:00 grep --color=always etcd
[root@etcd-node1 ~]# netstat -tunlp|grep etcd
tcp 0 0 192.168.56.121:23791 0.0.0.0:* LISTEN 1584/etcd
tcp 0 0 192.168.56.121:23792 0.0.0.0:* LISTEN 1590/etcd
tcp 0 0 192.168.56.121:23793 0.0.0.0:* LISTEN 1602/etcd
tcp 0 0 192.168.56.121:23801 0.0.0.0:* LISTEN 1584/etcd
tcp 0 0 192.168.56.121:23802 0.0.0.0:* LISTEN 1590/etcd
tcp 0 0 192.168.56.121:23803 0.0.0.0:* LISTEN 1602/etcd
[root@etcd-node1 ~]#
```

Each working directory now also contains data files:

```sh
[root@etcd-node1 ~]# find /srv/etcd/node1
/srv/etcd/node1
/srv/etcd/node1/start.sh
/srv/etcd/node1/nohup.out
/srv/etcd/node1/data.etcd
/srv/etcd/node1/data.etcd/member
/srv/etcd/node1/data.etcd/member/snap
/srv/etcd/node1/data.etcd/member/snap/db
/srv/etcd/node1/data.etcd/member/wal
/srv/etcd/node1/data.etcd/member/wal/0000000000000000-0000000000000000.wal
/srv/etcd/node1/data.etcd/member/wal/0.tmp
[root@etcd-node1 ~]# find /srv/etcd/node2
/srv/etcd/node2
/srv/etcd/node2/start.sh
/srv/etcd/node2/nohup.out
/srv/etcd/node2/data.etcd
/srv/etcd/node2/data.etcd/member
/srv/etcd/node2/data.etcd/member/snap
/srv/etcd/node2/data.etcd/member/snap/db
/srv/etcd/node2/data.etcd/member/wal
/srv/etcd/node2/data.etcd/member/wal/0000000000000000-0000000000000000.wal
/srv/etcd/node2/data.etcd/member/wal/0.tmp
[root@etcd-node1 ~]# find /srv/etcd/node3
/srv/etcd/node3
/srv/etcd/node3/start.sh
/srv/etcd/node3/nohup.out
/srv/etcd/node3/data.etcd
/srv/etcd/node3/data.etcd/member
/srv/etcd/node3/data.etcd/member/snap
/srv/etcd/node3/data.etcd/member/snap/db
/srv/etcd/node3/data.etcd/member/wal
/srv/etcd/node3/data.etcd/member/wal/0000000000000000-0000000000000000.wal
/srv/etcd/node3/data.etcd/member/wal/0.tmp
[root@etcd-node1 ~]#
```

3. Viewing Cluster Information

Add the following lines to `~/.bashrc` so later commands can reuse them:

```sh
export ETCDCTL_API=3
export HOST_1=192.168.56.121
export HOST_2=192.168.56.121
export HOST_3=192.168.56.121
export API_PORT_1=23791
export API_PORT_2=23792
export API_PORT_3=23793
export ENDPOINTS=${HOST_1}:${API_PORT_1},${HOST_2}:${API_PORT_2},${HOST_3}:${API_PORT_3}
```

Then run `source ~/.bashrc` to apply the settings.

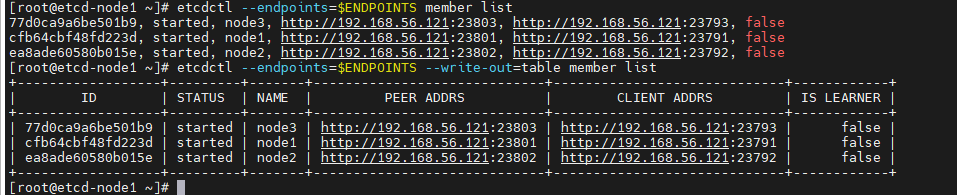
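If this step is scripted rather than done by hand, running it twice would append the exports twice. A minimal sketch that appends the block only when a marker comment is not already present; the function name `add_etcd_env` and the marker text are hypothetical. The heredoc delimiter is quoted, so `${HOST_1}` and the other references are written literally and only expanded when the rc file is sourced, just like the block above.

```sh
#!/bin/sh
# Hypothetical helper: append the etcdctl environment settings to an rc file
# (default ~/.bashrc) unless a previous run already added them.
add_etcd_env() {
  local rc_file="${1:-$HOME/.bashrc}"
  local marker="# etcd demo cluster settings"
  # -q: quiet, -F: fixed string; a missing file counts as "not present".
  if grep -qF "$marker" "$rc_file" 2>/dev/null; then
    return 0
  fi
  # Quoted 'EOF': no expansion now, variables are expanded at source time.
  cat >> "$rc_file" <<'EOF'
# etcd demo cluster settings
export ETCDCTL_API=3
export HOST_1=192.168.56.121
export HOST_2=192.168.56.121
export HOST_3=192.168.56.121
export API_PORT_1=23791
export API_PORT_2=23792
export API_PORT_3=23793
export ENDPOINTS=${HOST_1}:${API_PORT_1},${HOST_2}:${API_PORT_2},${HOST_3}:${API_PORT_3}
EOF
}
```

Usage: `add_etcd_env && source ~/.bashrc`.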

Once the settings are active, list the cluster members:

```sh
[root@etcd-node1 ~]# etcdctl --endpoints=$ENDPOINTS member list
77d0ca9a6be501b9, started, node3, http://192.168.56.121:23803, http://192.168.56.121:23793, false
cfb64cbf48fd223d, started, node1, http://192.168.56.121:23801, http://192.168.56.121:23791, false
ea8ade60580b015e, started, node2, http://192.168.56.121:23802, http://192.168.56.121:23792, false
[root@etcd-node1 ~]# etcdctl --endpoints=$ENDPOINTS --write-out=table member list
+------------------+---------+-------+-----------------------------+-----------------------------+------------+
| ID | STATUS | NAME | PEER ADDRS | CLIENT ADDRS | IS LEARNER |
+------------------+---------+-------+-----------------------------+-----------------------------+------------+
| 77d0ca9a6be501b9 | started | node3 | http://192.168.56.121:23803 | http://192.168.56.121:23793 | false |
| cfb64cbf48fd223d | started | node1 | http://192.168.56.121:23801 | http://192.168.56.121:23791 | false |
| ea8ade60580b015e | started | node2 | http://192.168.56.121:23802 | http://192.168.56.121:23792 | false |
+------------------+---------+-------+-----------------------------+-----------------------------+------------+
[root@etcd-node1 ~]#
```

The output lists the three etcd members deployed on this single server.

Note

The last column is IS LEARNER, not IS LEADER. Don't mix them up.
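The leader is reported by `etcdctl endpoint status` (its IS LEADER column), not by `member list`. In the default simple output each endpoint is one comma-separated line with IS LEADER as the fifth field, so a small filter can pick out the leader's endpoint. This is a sketch that assumes that field order, as seen in the etcd 3.5.18 output in this article; `leader_endpoint` is a hypothetical name, and for anything beyond quick checks the JSON output (`-w json`) is a sturdier input.

```sh
#!/bin/sh
# Hypothetical helper: read `etcdctl endpoint status` simple output on stdin
# and print the endpoint whose IS LEADER field (5th, ", "-separated) is true.
leader_endpoint() {
  awk -F', ' '$5 == "true" { print $1 }'
}

# Usage (requires the running cluster and ENDPOINTS from ~/.bashrc):
#   etcdctl --endpoints="$ENDPOINTS" endpoint status | leader_endpoint
```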

Check the status of the cluster endpoints:

```sh
[root@etcd-node1 ~]# etcdctl --endpoints=$ENDPOINTS endpoint status
192.168.56.121:23791, cfb64cbf48fd223d, 3.5.18, 20 kB, false, false, 3, 15, 15,
192.168.56.121:23792, ea8ade60580b015e, 3.5.18, 20 kB, true, false, 3, 15, 15,
192.168.56.121:23793, 77d0ca9a6be501b9, 3.5.18, 20 kB, false, false, 3, 15, 15,
[root@etcd-node1 ~]# etcdctl --endpoints=$ENDPOINTS -w=table endpoint status
+----------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
| ENDPOINT | ID | VERSION | DB SIZE | IS LEADER | IS LEARNER | RAFT TERM | RAFT INDEX | RAFT APPLIED INDEX | ERRORS |
+----------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
| 192.168.56.121:23791 | cfb64cbf48fd223d | 3.5.18 | 20 kB | false | false | 3 | 15 | 15 | |
| 192.168.56.121:23792 | ea8ade60580b015e | 3.5.18 | 20 kB | true | false | 3 | 15 | 15 | |
| 192.168.56.121:23793 | 77d0ca9a6be501b9 | 3.5.18 | 20 kB | false | false | 3 | 15 | 15 | |
+----------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
[root@etcd-node1 ~]#
```

Check the cluster health:

```sh
[root@etcd-node1 ~]# etcdctl --endpoints=$ENDPOINTS -w=table endpoint health
+----------------------+--------+------------+-------+
| ENDPOINT | HEALTH | TOOK | ERROR |
+----------------------+--------+------------+-------+
| 192.168.56.121:23792 | true | 1.564431ms | |
| 192.168.56.121:23793 | true | 1.461979ms | |
| 192.168.56.121:23791 | true | 1.350891ms | |
+----------------------+--------+------------+-------+
[root@etcd-node1 ~]#
```

Write and read some keys:

```sh
[root@etcd-node1 ~]# etcdctl --endpoints=$ENDPOINTS put greeting "Hello, etcd"
OK
[root@etcd-node1 ~]# etcdctl --endpoints=$ENDPOINTS get greeting
greeting
Hello, etcd
[root@etcd-node1 ~]# etcdctl --endpoints=$ENDPOINTS put name "etcd"
OK
[root@etcd-node1 ~]# etcdctl --endpoints=$ENDPOINTS get name
name
etcd
```

The values are read back as expected.

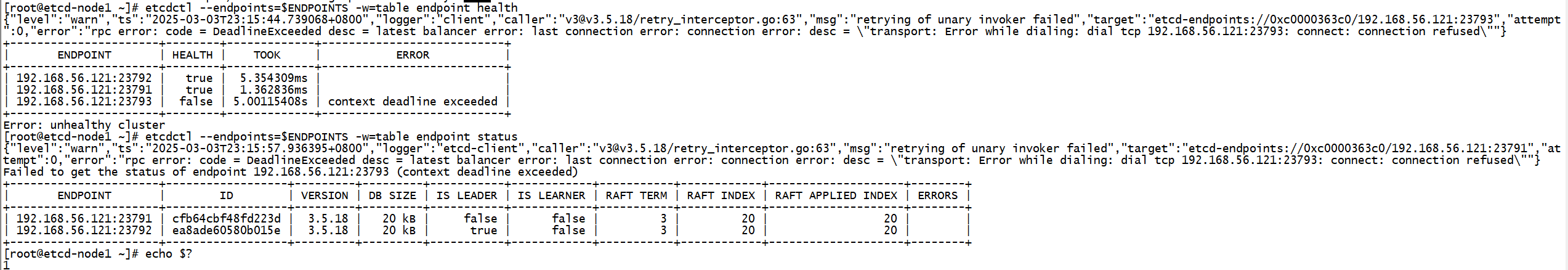
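The same put/get check can be wrapped into a small smoke test that writes a value and compares it with what is read back. The `--print-value-only` flag makes `etcdctl get` print only the value instead of the key and value lines shown above. The function name `kv_roundtrip` is hypothetical, and it assumes `ENDPOINTS` is set as in `~/.bashrc`.

```sh
#!/bin/sh
# Hypothetical smoke test: put KEY=VALUE through the cluster, read it back,
# and succeed (exit status 0) only if the stored value matches.
kv_roundtrip() {
  local key="$1" value="$2" got
  etcdctl --endpoints="$ENDPOINTS" put "$key" "$value" >/dev/null || return 1
  # Assign separately so a failing etcdctl is not masked by `local`.
  got=$(etcdctl --endpoints="$ENDPOINTS" get --print-value-only "$key") || return 1
  [ "$got" = "$value" ]
}

# Usage:
#   kv_roundtrip greeting "Hello, etcd" && echo "round-trip OK"
```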

After killing the node3 process, check the endpoint health and status again:

```sh
[root@etcd-node1 ~]# etcdctl --endpoints=$ENDPOINTS -w=table endpoint health
{"level":"warn","ts":"2025-03-03T23:15:44.739068+0800","logger":"client","caller":"v3@v3.5.18/retry_interceptor.go:63","msg":"retrying of unary invoker failed","target":"etcd-endpoints://0xc0000363c0/192.168.56.121:23793","attempt":0,"error":"rpc error: code = DeadlineExceeded desc = latest balancer error: last connection error: connection error: desc = \"transport: Error while dialing: dial tcp 192.168.56.121:23793: connect: connection refused\""}
+----------------------+--------+-------------+---------------------------+
| ENDPOINT | HEALTH | TOOK | ERROR |
+----------------------+--------+-------------+---------------------------+
| 192.168.56.121:23792 | true | 5.354309ms | |
| 192.168.56.121:23791 | true | 1.362836ms | |
| 192.168.56.121:23793 | false | 5.00115408s | context deadline exceeded |
+----------------------+--------+-------------+---------------------------+
Error: unhealthy cluster
[root@etcd-node1 ~]# etcdctl --endpoints=$ENDPOINTS -w=table endpoint status
{"level":"warn","ts":"2025-03-03T23:15:57.936395+0800","logger":"etcd-client","caller":"v3@v3.5.18/retry_interceptor.go:63","msg":"retrying of unary invoker failed","target":"etcd-endpoints://0xc0000363c0/192.168.56.121:23791","attempt":0,"error":"rpc error: code = DeadlineExceeded desc = latest balancer error: last connection error: connection error: desc = \"transport: Error while dialing: dial tcp 192.168.56.121:23793: connect: connection refused\""}
Failed to get the status of endpoint 192.168.56.121:23793 (context deadline exceeded)
+----------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
| ENDPOINT | ID | VERSION | DB SIZE | IS LEADER | IS LEARNER | RAFT TERM | RAFT INDEX | RAFT APPLIED INDEX | ERRORS |
+----------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
| 192.168.56.121:23791 | cfb64cbf48fd223d | 3.5.18 | 20 kB | false | false | 3 | 20 | 20 | |
| 192.168.56.121:23792 | ea8ade60580b015e | 3.5.18 | 20 kB | true | false | 3 | 20 | 20 | |
+----------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
[root@etcd-node1 ~]#
```

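Both checks now report the third member as unavailable. The remaining two members still form a quorum (2 of 3), so the cluster keeps its leader on 192.168.56.121:23792.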
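After a failed member is restarted, a script may need to wait until every endpoint reports healthy before continuing. As the run above shows, `etcdctl endpoint health` ends with `Error: unhealthy cluster` when an endpoint is down, and in that case it exits with a non-zero status, so a retry loop can poll it. A minimal sketch; `wait_healthy`, its default attempt count, and its interval are hypothetical choices.

```sh
#!/bin/sh
# Hypothetical helper: poll `etcdctl endpoint health` until every endpoint in
# $ENDPOINTS is healthy. Args: max attempts (default 10), seconds between
# attempts (default 1). Returns 0 once healthy, 1 if the attempts run out.
wait_healthy() {
  local tries="${1:-10}" interval="${2:-1}"
  while [ "$tries" -gt 0 ]; do
    if etcdctl --endpoints="$ENDPOINTS" endpoint health >/dev/null 2>&1; then
      return 0
    fi
    tries=$((tries - 1))
    # Sleep only if another attempt will follow.
    [ "$tries" -gt 0 ] && sleep "$interval"
  done
  return 1
}

# Usage, after restarting node3:
#   (cd /srv/etcd/node3 && ./start.sh) && wait_healthy 30 1
```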

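Start node3 again (for example by re-running its start.sh) and the cluster is healthy again. Because data.etcd already exists, etcd ignores the `--initial-*` flags on restart and the member rejoins with its existing data: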

```sh
[root@etcd-node1 ~]# etcdctl --endpoints=$ENDPOINTS -w=table endpoint health
+----------------------+--------+------------+-------+
| ENDPOINT | HEALTH | TOOK | ERROR |
+----------------------+--------+------------+-------+
| 192.168.56.121:23792 | true | 1.413995ms | |
| 192.168.56.121:23793 | true | 1.560991ms | |
| 192.168.56.121:23791 | true | 3.256187ms | |
+----------------------+--------+------------+-------+
[root@etcd-node1 ~]# etcdctl --endpoints=$ENDPOINTS -w=table endpoint status
+----------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
| ENDPOINT | ID | VERSION | DB SIZE | IS LEADER | IS LEARNER | RAFT TERM | RAFT INDEX | RAFT APPLIED INDEX | ERRORS |
+----------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
| 192.168.56.121:23791 | cfb64cbf48fd223d | 3.5.18 | 20 kB | false | false | 3 | 27 | 27 | |
| 192.168.56.121:23792 | ea8ade60580b015e | 3.5.18 | 20 kB | true | false | 3 | 27 | 27 | |
| 192.168.56.121:23793 | 77d0ca9a6be501b9 | 3.5.18 | 20 kB | false | false | 3 | 27 | 27 | |
+----------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
[root@etcd-node1 ~]#
```