etcd Configuration File

1. Overview

This article is part of a series of summary notes on etcd.

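- Part 1, Installing etcd: install etcd on CentOS 7.
- Part 2, Running etcd on a single node: run etcd on one node.
- Part 3, Deploying a three-node etcd cluster: deploy an etcd cluster on three nodes and set up some shortcut commands for etcd.
- Part 4, etcd TLS cluster deployment: deploy an etcd cluster on three nodes with TLS-encrypted communication.
- Part 5, etcd role-based access control: on top of the three-node etcd TLS cluster, enable role-based access control. For details, see https://etcd.io/docs/v3.5/demo/ .
- Part 6, Handling expired etcd certificates: once the etcd certificates expire, none of the etcd command-line operations work; this part explains how to deal with that.
- Part 7, etcd configuration file: set the parameters in an etcd configuration file and start the etcd service from it.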

1.1 VirtualBox Virtual Machines

The following virtual machines are used while learning etcd:

| No. | VM | Hostname | IP | CPU | Memory | Notes |
|---|---|---|---|---|---|---|
| 1 | ansible-master | ansible | 192.168.56.120 | 2 cores | 4 GB | Ansible control node |
| 2 | ansible-node1 | etcd-node1 | 192.168.56.121 | 2 cores | 2 GB | Ansible managed node 1 |
| 3 | ansible-node2 | etcd-node2 | 192.168.56.122 | 2 cores | 2 GB | Ansible managed node 2 |
| 4 | ansible-node3 | etcd-node3 | 192.168.56.123 | 2 cores | 2 GB | Ansible managed node 3 |

An Ansible playbook for deploying the etcd cluster will be written later.

Operating system:

```bash
[root@etcd-node1 ~]# cat /etc/centos-release
CentOS Linux release 7.9.2009 (Core)
[root@etcd-node1 ~]# hostname -I
192.168.56.121 10.0.3.15
[root@etcd-node1 ~]#
```

2. etcd Configuration File

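Until now, every etcd node has been started by passing the relevant parameters on the command line; for details, see Part 4, etcd TLS cluster deployment, which deploys the three-node etcd cluster.

The official etcd documentation at https://etcd.io/docs/v3.5/op-guide/configuration/ also notes that etcd can be configured through a configuration file: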

Configuration file

An etcd configuration file consists of a YAML map whose keys are command-line flag names and values are the flag values. In order to use this file, specify the file path as a value to the `--config-file` flag or `ETCD_CONFIG_FILE` environment variable. For an example, see the etcd.conf.yml sample.
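Because each key is simply a flag name without the leading `--`, the top-level flags of an existing command line (such as the one start.sh builds) can be converted mechanically. Below is a minimal sketch under that assumption; `flags_to_yaml` is a hypothetical helper, not part of etcd. It deliberately ignores nesting: TLS flags such as `--cert-file` or `--peer-client-cert-auth` belong under `client-transport-security` or `peer-transport-security` in the file and still have to be moved there by hand.

```bash
# flags_to_yaml: hypothetical helper that prints etcd command-line flags as
# top-level YAML keys. Handles "--key value", "--key=value" and bare boolean
# flags ("--key" -> "key: true"). Nested TLS settings are NOT handled.
#
# Example:
#   flags_to_yaml --name node2 --data-dir=data.etcd --client-cert-auth
# prints:
#   name: node2
#   data-dir: data.etcd
#   client-cert-auth: true
flags_to_yaml() {
  local key
  while [ "$#" -gt 0 ]; do
    case "$1" in
      --*=*)                                    # --key=value
        key="${1%%=*}"
        printf '%s: %s\n' "${key#--}" "${1#*=}"
        shift
        ;;
      --*)
        key="${1#--}"
        if [ "$#" -ge 2 ] && [ "${2#--}" = "$2" ]; then
          printf '%s: %s\n' "$key" "$2"         # --key value
          shift 2
        else
          printf '%s: true\n' "$key"            # bare boolean flag
          shift
        fi
        ;;
      *)
        echo "flags_to_yaml: unexpected argument '$1'" >&2
        return 1
        ;;
    esac
  done
}
```

In the example, the last line illustrates the caveat: in the configuration file, `client-cert-auth` must sit under `client-transport-security`, not at the top level.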

2.1 etcd Configuration Example

The sample etcd.conf.yml configuration file:

```yaml
# This is the configuration file for the etcd server.

# Human-readable name for this member.
name: 'default'

# Path to the data directory.
data-dir:

# Path to the dedicated wal directory.
wal-dir:

# Number of committed transactions to trigger a snapshot to disk.
snapshot-count: 10000

# Time (in milliseconds) of a heartbeat interval.
heartbeat-interval: 100

# Time (in milliseconds) for an election to timeout.
election-timeout: 1000

# Raise alarms when backend size exceeds the given quota. 0 means use the
# default quota.
quota-backend-bytes: 0

# List of comma separated URLs to listen on for peer traffic.
listen-peer-urls: http://localhost:2380

# List of comma separated URLs to listen on for client traffic.
listen-client-urls: http://localhost:2379

# Maximum number of snapshot files to retain (0 is unlimited).
max-snapshots: 5

# Maximum number of wal files to retain (0 is unlimited).
max-wals: 5

# Comma-separated white list of origins for CORS (cross-origin resource sharing).
cors:

# List of this member's peer URLs to advertise to the rest of the cluster.
# The URLs needed to be a comma-separated list.
initial-advertise-peer-urls: http://localhost:2380

# List of this member's client URLs to advertise to the public.
# The URLs needed to be a comma-separated list.
advertise-client-urls: http://localhost:2379

# Discovery URL used to bootstrap the cluster.
discovery:

# Valid values include 'exit', 'proxy'
discovery-fallback: 'proxy'

# HTTP proxy to use for traffic to discovery service.
discovery-proxy:

# DNS domain used to bootstrap initial cluster.
discovery-srv:

# Comma separated string of initial cluster configuration for bootstrapping.
# Example: initial-cluster: "infra0=http://10.0.1.10:2380,infra1=http://10.0.1.11:2380,infra2=http://10.0.1.12:2380"
initial-cluster:

# Initial cluster token for the etcd cluster during bootstrap.
initial-cluster-token: 'etcd-cluster'

# Initial cluster state ('new' or 'existing').
initial-cluster-state: 'new'

# Reject reconfiguration requests that would cause quorum loss.
strict-reconfig-check: false

# Enable runtime profiling data via HTTP server
enable-pprof: true

# Valid values include 'on', 'readonly', 'off'
proxy: 'off'

# Time (in milliseconds) an endpoint will be held in a failed state.
proxy-failure-wait: 5000

# Time (in milliseconds) of the endpoints refresh interval.
proxy-refresh-interval: 30000

# Time (in milliseconds) for a dial to timeout.
proxy-dial-timeout: 1000

# Time (in milliseconds) for a write to timeout.
proxy-write-timeout: 5000

# Time (in milliseconds) for a read to timeout.
proxy-read-timeout: 0

client-transport-security:
  # Path to the client server TLS cert file.
  cert-file:

  # Path to the client server TLS key file.
  key-file:

  # Enable client cert authentication.
  client-cert-auth: false

  # Path to the client server TLS trusted CA cert file.
  trusted-ca-file:

  # Client TLS using generated certificates
  auto-tls: false

peer-transport-security:
  # Path to the peer server TLS cert file.
  cert-file:

  # Path to the peer server TLS key file.
  key-file:

  # Enable peer client cert authentication.
  client-cert-auth: false

  # Path to the peer server TLS trusted CA cert file.
  trusted-ca-file:

  # Peer TLS using generated certificates.
  auto-tls: false

  # Allowed CN for inter peer authentication.
  allowed-cn:

  # Allowed TLS hostname for inter peer authentication.
  allowed-hostname:

# The validity period of the self-signed certificate, the unit is year.
self-signed-cert-validity: 1

# Enable debug-level logging for etcd.
log-level: debug

logger: zap

# Specify 'stdout' or 'stderr' to skip journald logging even when running under systemd.
log-outputs: [stderr]

# Force to create a new one member cluster.
force-new-cluster: false

auto-compaction-mode: periodic
auto-compaction-retention: "1"

# Limit etcd to a specific set of tls cipher suites
cipher-suites: [
  TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256,
  TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384
]

# Limit etcd to specific TLS protocol versions
tls-min-version: 'TLS1.2'
tls-max-version: 'TLS1.3'
```

2.2 Starting Node 1 from the Configuration File

Create the config and logs directories under /srv/etcd/node.
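A small sketch of this step, assuming the /srv/etcd/node layout used throughout this article; `prepare_etcd_dirs` is a hypothetical helper. It also pre-creates an empty etcd.yaml with owner-only permissions (600), in line with the file-permission advice in section 2.5.

```bash
# prepare_etcd_dirs: hypothetical helper for the per-member layout used here:
#   <base>/config  holds etcd.yaml
#   <base>/logs    holds nohup.out and etcd.log
prepare_etcd_dirs() {
  local base="${1:-/srv/etcd/node}"
  mkdir -p "$base/config" "$base/logs" || return 1
  # Create the config file with mode 600 before any content is written to it.
  if [ ! -e "$base/config/etcd.yaml" ]; then
    (umask 077 && : > "$base/config/etcd.yaml") || return 1
  fi
}
```

On the nodes of this article it would be called as `prepare_etcd_dirs /srv/etcd/node`.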

Then create the configuration file etcd.yaml in the config directory with the following content:

```yaml
# This is the configuration file for the etcd server.

# Human-readable name for this member.
# Must be unique in the cluster; the default is 'default'.
name: 'node1'

# Path to the data directory.
data-dir: /srv/etcd/node/data.etcd

# Path to the dedicated wal directory.
# By default the WAL lives under data-dir. When set, etcd writes WAL files
# here instead of data-dir, separating them from other runtime data.
# Left unset so that the data layout of the existing cluster is not changed.
wal-dir:

# Number of committed transactions to trigger a snapshot to disk.
snapshot-count: 10000

# Time (in milliseconds) of a heartbeat interval.
# How often the leader sends heartbeats to the followers.
heartbeat-interval: 100

# Time (in milliseconds) for an election to timeout.
# A follower that receives no heartbeat within this time starts a new
# election. Default: 1000 ms.
election-timeout: 1000

# Raise alarms when backend size exceeds the given quota. 0 means use the
# default quota.
quota-backend-bytes: 0

# List of comma separated URLs to listen on for peer traffic.
# Used for data replication between cluster members.
listen-peer-urls: https://192.168.56.121:2380

# List of comma separated URLs to listen on for client traffic.
listen-client-urls: https://192.168.56.121:2379

# Maximum number of snapshot files to retain (0 is unlimited).
max-snapshots: 5

# Maximum number of wal files to retain (0 is unlimited).
max-wals: 5

# Comma-separated white list of origins for CORS (cross-origin resource sharing).
cors:

# List of this member's peer URLs to advertise to the rest of the cluster.
# The URLs needed to be a comma-separated list.
initial-advertise-peer-urls: https://192.168.56.121:2380

# List of this member's client URLs to advertise to the public.
# The URLs needed to be a comma-separated list.
# These are the URLs that clients actually connect to.
advertise-client-urls: https://192.168.56.121:2379

# Discovery URL used to bootstrap the cluster.
discovery:

# Valid values include 'exit', 'proxy'
# Behavior when the discovery service fails.
discovery-fallback: 'proxy'

# HTTP proxy to use for traffic to discovery service.
discovery-proxy:

# DNS domain used to bootstrap initial cluster (DNS SRV discovery).
discovery-srv:

# Comma separated string of initial cluster configuration for bootstrapping.
# Example: initial-cluster: "infra0=http://10.0.1.10:2380,infra1=http://10.0.1.11:2380,infra2=http://10.0.1.12:2380"
# Lists the name and peer URL of every member: name1=url1,name2=url2,...
initial-cluster: node1=https://192.168.56.121:2380,node2=https://192.168.56.122:2380,node3=https://192.168.56.123:2380

# Initial cluster token for the etcd cluster during bootstrap.
# Distinguishes this cluster from others; the default 'etcd-cluster' can
# collide between clusters, so a custom value is recommended.
initial-cluster-token: 'token-01'

# Initial cluster state ('new' or 'existing').
# 'new' bootstraps a new cluster; 'existing' joins an existing one.
initial-cluster-state: 'new'

# Reject reconfiguration requests that would cause quorum loss.
strict-reconfig-check: false

# Enable runtime profiling data via HTTP server
# Served under the client URL + "/debug/pprof/".
enable-pprof: true

# Valid values include 'on', 'readonly', 'off'
# Proxy mode.
proxy: 'off'

# Time (in milliseconds) an endpoint will be held in a failed state.
proxy-failure-wait: 5000

# Time (in milliseconds) of the endpoints refresh interval.
proxy-refresh-interval: 30000

# Time (in milliseconds) for a dial to timeout.
proxy-dial-timeout: 1000

# Time (in milliseconds) for a write to timeout.
proxy-write-timeout: 5000

# Time (in milliseconds) for a read to timeout.
proxy-read-timeout: 0

# Client transport security
client-transport-security:
  # Path to the client server TLS cert file.
  # Server certificate this member presents to clients.
  cert-file: /etc/etcd/ssl/node1.crt

  # Path to the client server TLS key file.
  # Private key matching cert-file; restrict its permissions (chmod 600).
  key-file: /etc/etcd/ssl/node1.key

  # Enable client cert authentication.
  # Clients must present a valid certificate signed by trusted-ca-file.
  # Default: false. Set to true to match the --client-cert-auth flag that
  # start.sh uses for this TLS cluster with role-based access control.
  client-cert-auth: true

  # Path to the client server TLS trusted CA cert file.
  # CA used to verify client certificates.
  trusted-ca-file: /etc/etcd/ssl/ca.crt

  # Client TLS using generated certificates
  # Disabled: the certificates above are used instead.
  auto-tls: false

# Peer (member-to-member) transport security
peer-transport-security:
  # Path to the peer server TLS cert file.
  cert-file: /etc/etcd/ssl/node1.crt

  # Path to the peer server TLS key file.
  key-file: /etc/etcd/ssl/node1.key

  # Enable peer client cert authentication.
  # Other members must present a valid certificate signed by trusted-ca-file.
  # Default: false. Set to true to match --peer-client-cert-auth in start.sh.
  client-cert-auth: true

  # Path to the peer server TLS trusted CA cert file.
  # Usually the same CA as the one used for clients.
  trusted-ca-file: /etc/etcd/ssl/ca.crt

  # Peer TLS using generated certificates.
  # Disabled: the certificates above are used instead.
  auto-tls: false

  # Allowed CN for inter peer authentication.
  allowed-cn:

  # Allowed TLS hostname for inter peer authentication.
  allowed-hostname:

# The validity period of the self-signed certificate, the unit is year.
# Default: 1.
self-signed-cert-validity: 1

# Log level: debug, info, warn, error, panic or fatal.
# etcd defaults to info (the upstream sample sets debug); info is recommended
# for production.
log-level: info

# Logger implementation; zap is a structured logging library for Go.
logger: zap

# Specify 'stdout' or 'stderr' to skip journald logging even when running under systemd.
# Several targets may be listed, here stderr plus a log file.
log-outputs: [stderr, "/srv/etcd/node/logs/etcd.log"]

# Force to create a new one member cluster.
force-new-cluster: false

auto-compaction-mode: periodic
auto-compaction-retention: "1"

# Limit etcd to a specific set of tls cipher suites
cipher-suites: [
  TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256,
  TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384
]

# Limit etcd to specific TLS protocol versions
tls-min-version: 'TLS1.2'
tls-max-version: 'TLS1.3'
```

Then create the start script start_by_config.sh in the /srv/etcd/node directory:

```bash
[root@etcd-node1 ~]# cd /srv/etcd/node
[root@etcd-node1 node]# cat start_by_config.sh
#!/bin/bash
cd /srv/etcd/node/logs || exit 1
nohup etcd --config-file=/srv/etcd/node/config/etcd.yaml &
[root@etcd-node1 node]# ll config/
total 8
-rw-r--r-- 1 root root 7711 May 7 22:23 etcd.yaml
[root@etcd-node1 node]#
```

Start node2 and node3 with the earlier start.sh script as before, and start node1 with start_by_config.sh.

```bash
# Start etcd on node 1
[root@etcd-node1 node]# ./start_by_config.sh
[root@etcd-node1 node]# nohup: appending output to ‘nohup.out’
[root@etcd-node1 node]#
[root@etcd-node1 node]# ps -ef|grep etcd
root 1437 1 1 22:26 pts/0 00:00:01 etcd --config-file=/srv/etcd/node/config/etcd.yaml
[root@etcd-node1 node]#

# Start etcd on node 2
[root@etcd-node2 ~]# cd /srv/etcd/node/
[root@etcd-node2 node]# ./start.sh
CLUSTER:node1=https://192.168.56.121:2380,node2=https://192.168.56.122:2380,node3=https://192.168.56.123:2380
[root@etcd-node2 node]# nohup: appending output to ‘nohup.out’
[root@etcd-node2 node]#
[root@etcd-node2 node]# ps -ef|grep etcd
root 1418 1 0 22:27 pts/0 00:00:05 etcd --data-dir=data.etcd --name node2 --initial-advertise-peer-urls https://192.168.56.122:2380 --listen-peer-urls https://192.168.56.122:2380 --advertise-client-urls https://192.168.56.122:2379 --listen-client-urls https://192.168.56.122:2379 --initial-cluster node1=https://192.168.56.121:2380,node2=https://192.168.56.122:2380,node3=https://192.168.56.123:2380 --initial-cluster-state new --initial-cluster-token token-01 --client-cert-auth --trusted-ca-file /etc/etcd/ssl/ca.crt --cert-file /etc/etcd/ssl/node2.crt --key-file /etc/etcd/ssl/node2.key --peer-client-cert-auth --peer-trusted-ca-file /etc/etcd/ssl/ca.crt --peer-cert-file /etc/etcd/ssl/node2.crt --peer-key-file /etc/etcd/ssl/node2.key
root 1439 1394 0 22:36 pts/0 00:00:00 grep --color=always etcd

# Start etcd on node 3
[root@etcd-node3 ~]# cd /srv/etcd/node/
[root@etcd-node3 node]# ls
data.etcd start_auto_ssl.sh start.sh
nohup.out start_no_ssl.sh stop.sh
[root@etcd-node3 node]# ./start.sh
CLUSTER:node1=https://192.168.56.121:2380,node2=https://192.168.56.122:2380,node3=https://192.168.56.123:2380
[root@etcd-node3 node]# nohup: appending output to ‘nohup.out’
[root@etcd-node3 node]#
[root@etcd-node3 node]# ps -ef|grep etcd
root 1422 1 0 22:27 pts/0 00:00:06 etcd --data-dir=data.etcd --name node3 --initial-advertise-peer-urls https://192.168.56.123:2380 --listen-peer-urls https://192.168.56.123:2380 --advertise-client-urls https://192.168.56.123:2379 --listen-client-urls https://192.168.56.123:2379 --initial-cluster node1=https://192.168.56.121:2380,node2=https://192.168.56.122:2380,node3=https://192.168.56.123:2380 --initial-cluster-state new --initial-cluster-token token-01 --client-cert-auth --trusted-ca-file /etc/etcd/ssl/ca.crt --cert-file /etc/etcd/ssl/node3.crt --key-file /etc/etcd/ssl/node3.key --peer-client-cert-auth --peer-trusted-ca-file /etc/etcd/ssl/ca.crt --peer-cert-file /etc/etcd/ssl/node3.crt --peer-key-file /etc/etcd/ssl/node3.key
root 1441 1399 0 22:37 pts/0 00:00:00 grep --color=always etcd
[root@etcd-node3 node]#
```

Now check the cluster status (`ecs` is one of the shortcut commands set up in Part 3):

```bash
[root@etcd-node1 ~]# ecs
+-----------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
| ENDPOINT | ID | VERSION | DB SIZE | IS LEADER | IS LEARNER | RAFT TERM | RAFT INDEX | RAFT APPLIED INDEX | ERRORS |
+-----------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
| https://192.168.56.121:2379 | e14cb1abc9daea5b | 3.5.18 | 25 kB | true | false | 18 | 740 | 740 | |
| https://192.168.56.122:2379 | d553b4da699c7263 | 3.5.18 | 25 kB | false | false | 18 | 741 | 741 | |
| https://192.168.56.123:2379 | a7d7b09bf04ad21b | 3.5.18 | 25 kB | false | false | 18 | 742 | 742 | |
+-----------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
[root@etcd-node1 ~]#
```

The cluster is healthy, which shows that the etcd service on node1, started from the configuration file, works correctly.
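The check above is done by eye. A minimal sketch of automating it, assuming `ecs` prints the `etcdctl endpoint status -w table` layout shown above (the alias itself comes from Part 3 and is not reproduced here); `check_cluster_status` is a hypothetical helper. It reads the table on stdin and fails unless there is exactly one leader and no member reports an error.

```bash
# check_cluster_status: hypothetical helper. Reads an
# `etcdctl endpoint status -w table` table on stdin, prints a summary, and
# returns non-zero unless exactly one member is leader and no row has errors.
check_cluster_status() {
  awk -F'|' '
    # Data rows carry an endpoint URL in the ENDPOINT column ($2, because the
    # leading "|" makes $1 empty).
    $2 ~ /https?:\/\// {
      members++
      leader = $6;  gsub(/ /, "", leader)   # IS LEADER (5th table column)
      errors = $11; gsub(/ /, "", errors)   # ERRORS (last table column)
      if (leader == "true") leaders++
      if (errors != "") bad++
    }
    END {
      printf "members=%d leaders=%d errors=%d\n", members, leaders, bad
      exit !(members > 0 && leaders == 1 && bad == 0)
    }'
}
```

For the table above, `ecs | check_cluster_status` prints `members=3 leaders=1 errors=0` and returns 0, so it can gate the next step of a script.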

Verify reads and writes:

```bash
[root@etcd-node1 ~]# etcdctl --user root --password securePassword get greeting
greeting
Hello, etcd
[root@etcd-node1 ~]# etcdctl --user root --password securePassword put config etcd.yaml
OK
[root@etcd-node1 ~]# etcdctl --user root --password securePassword get config
config
etcd.yaml
[root@etcd-node1 ~]#
```

Reads and writes work normally.
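The same read/write check can be scripted. A minimal sketch, assuming `etcdctl` in this shell already picks up the endpoints and TLS certificates, as it does in the transcript above; `etcd_roundtrip`, `ETCD_USER` and `ETCD_PASSWORD` are hypothetical names. It uses the `--print-value-only` option of `etcdctl get` to read back only the value.

```bash
# etcd_roundtrip: hypothetical helper. Writes KEY=VALUE, reads it back and
# succeeds only if the stored value matches.
# Credentials come from ETCD_USER / ETCD_PASSWORD (hypothetical variable names).
etcd_roundtrip() {
  local key="$1" value="$2" got
  etcdctl --user "$ETCD_USER" --password "$ETCD_PASSWORD" put "$key" "$value" >/dev/null || return 1
  got="$(etcdctl --user "$ETCD_USER" --password "$ETCD_PASSWORD" get "$key" --print-value-only)" || return 1
  if [ "$got" != "$value" ]; then
    echo "mismatch for '$key': wrote '$value', read '$got'" >&2
    return 1
  fi
}
```

For example: `ETCD_USER=root ETCD_PASSWORD=securePassword etcd_roundtrip config etcd.yaml && echo OK`. As in the transcript, a password passed on the command line is visible in `ps` output while the command runs.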

2.3 节点2和节点3使用配置文件方式启动etcd

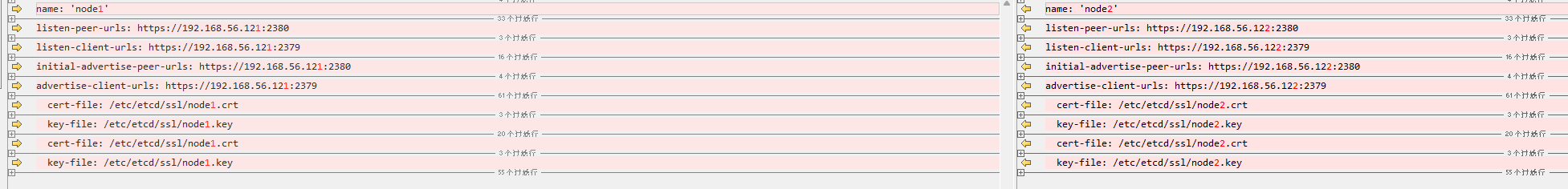

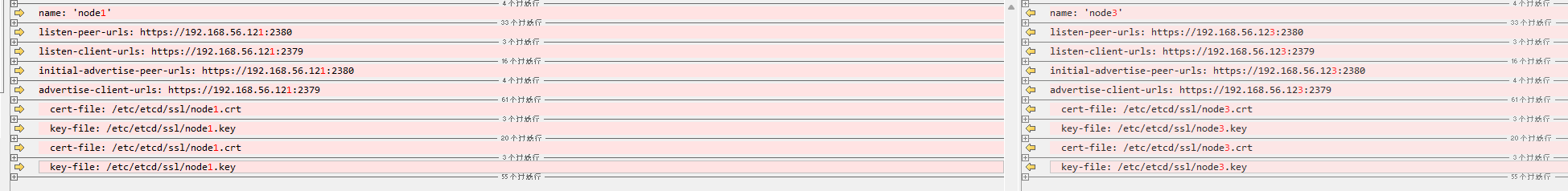

复制一份node1的配置文件etcd.yaml并进行修改,主要修改以下几个部分:

得到节点2和节点3的配置文件,并将配置文件分别上传到这两个节点的/srv/etcd/config目录下。
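Because only these per-member fields differ, the copies can also be generated instead of edited by hand. A minimal sed sketch, assuming node N uses IP 192.168.56.12N and the certificates /etc/etcd/ssl/nodeN.crt and nodeN.key, as in the VM table and configs of this article; `render_node_config` is a hypothetical helper. The `initial-cluster` line lists every member and is deliberately left unchanged.

```bash
# render_node_config: hypothetical helper. Prints node N's etcd.yaml derived
# from node1's file. Assumes node N has IP 192.168.56.12N and certificates
# /etc/etcd/ssl/nodeN.crt / nodeN.key.
render_node_config() {
  local n="$1" src="$2"
  local ip="192.168.56.12${n}"
  # 1. member name
  # 2. node1's IP everywhere except the initial-cluster member list
  #    (single quotes keep "!" away from interactive history expansion)
  # 3. certificate and key paths
  sed -e "s/^name: 'node1'/name: 'node${n}'/" \
      -e '/^initial-cluster:/!s/192\.168\.56\.121/'"$ip"'/g' \
      -e "s#/etc/etcd/ssl/node1\.#/etc/etcd/ssl/node${n}.#" \
      "$src"
}
```

For example, `render_node_config 2 /srv/etcd/node/config/etcd.yaml > etcd-node2.yaml` produces node 2's file; checking it with the same grep commands used in 2.3.1 before uploading it is still worthwhile.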

2.3.1 Restarting etcd on Node 2

The modified configuration file for node 2:

```yaml
# This is the configuration file for the etcd server.

# Human-readable name for this member.
# Must be unique in the cluster; the default is 'default'.
name: 'node2'

# Path to the data directory.
data-dir: /srv/etcd/node/data.etcd

# Path to the dedicated wal directory.
# By default the WAL lives under data-dir. When set, etcd writes WAL files
# here instead of data-dir, separating them from other runtime data.
# Left unset so that the data layout of the existing cluster is not changed.
wal-dir:

# Number of committed transactions to trigger a snapshot to disk.
snapshot-count: 10000

# Time (in milliseconds) of a heartbeat interval.
# How often the leader sends heartbeats to the followers.
heartbeat-interval: 100

# Time (in milliseconds) for an election to timeout.
# A follower that receives no heartbeat within this time starts a new
# election. Default: 1000 ms.
election-timeout: 1000

# Raise alarms when backend size exceeds the given quota. 0 means use the
# default quota.
quota-backend-bytes: 0

# List of comma separated URLs to listen on for peer traffic.
# Used for data replication between cluster members.
listen-peer-urls: https://192.168.56.122:2380

# List of comma separated URLs to listen on for client traffic.
listen-client-urls: https://192.168.56.122:2379

# Maximum number of snapshot files to retain (0 is unlimited).
max-snapshots: 5

# Maximum number of wal files to retain (0 is unlimited).
max-wals: 5

# Comma-separated white list of origins for CORS (cross-origin resource sharing).
cors:

# List of this member's peer URLs to advertise to the rest of the cluster.
# The URLs needed to be a comma-separated list.
initial-advertise-peer-urls: https://192.168.56.122:2380

# List of this member's client URLs to advertise to the public.
# The URLs needed to be a comma-separated list.
# These are the URLs that clients actually connect to.
advertise-client-urls: https://192.168.56.122:2379

# Discovery URL used to bootstrap the cluster.
discovery:

# Valid values include 'exit', 'proxy'
# Behavior when the discovery service fails.
discovery-fallback: 'proxy'

# HTTP proxy to use for traffic to discovery service.
discovery-proxy:

# DNS domain used to bootstrap initial cluster (DNS SRV discovery).
discovery-srv:

# Comma separated string of initial cluster configuration for bootstrapping.
# Example: initial-cluster: "infra0=http://10.0.1.10:2380,infra1=http://10.0.1.11:2380,infra2=http://10.0.1.12:2380"
# Lists the name and peer URL of every member: name1=url1,name2=url2,...
initial-cluster: node1=https://192.168.56.121:2380,node2=https://192.168.56.122:2380,node3=https://192.168.56.123:2380

# Initial cluster token for the etcd cluster during bootstrap.
# Distinguishes this cluster from others; the default 'etcd-cluster' can
# collide between clusters, so a custom value is recommended.
initial-cluster-token: 'token-01'

# Initial cluster state ('new' or 'existing').
# 'new' bootstraps a new cluster; 'existing' joins an existing one.
initial-cluster-state: 'new'

# Reject reconfiguration requests that would cause quorum loss.
strict-reconfig-check: false

# Enable runtime profiling data via HTTP server
# Served under the client URL + "/debug/pprof/".
enable-pprof: true

# Valid values include 'on', 'readonly', 'off'
# Proxy mode.
proxy: 'off'

# Time (in milliseconds) an endpoint will be held in a failed state.
proxy-failure-wait: 5000

# Time (in milliseconds) of the endpoints refresh interval.
proxy-refresh-interval: 30000

# Time (in milliseconds) for a dial to timeout.
proxy-dial-timeout: 1000

# Time (in milliseconds) for a write to timeout.
proxy-write-timeout: 5000

# Time (in milliseconds) for a read to timeout.
proxy-read-timeout: 0

# Client transport security
client-transport-security:
  # Path to the client server TLS cert file.
  # Server certificate this member presents to clients.
  cert-file: /etc/etcd/ssl/node2.crt

  # Path to the client server TLS key file.
  # Private key matching cert-file; restrict its permissions (chmod 600).
  key-file: /etc/etcd/ssl/node2.key

  # Enable client cert authentication.
  # Clients must present a valid certificate signed by trusted-ca-file.
  # Default: false. Set to true to match the --client-cert-auth flag that
  # start.sh uses for this TLS cluster with role-based access control.
  client-cert-auth: true

  # Path to the client server TLS trusted CA cert file.
  # CA used to verify client certificates.
  trusted-ca-file: /etc/etcd/ssl/ca.crt

  # Client TLS using generated certificates
  # Disabled: the certificates above are used instead.
  auto-tls: false

# Peer (member-to-member) transport security
peer-transport-security:
  # Path to the peer server TLS cert file.
  cert-file: /etc/etcd/ssl/node2.crt

  # Path to the peer server TLS key file.
  key-file: /etc/etcd/ssl/node2.key

  # Enable peer client cert authentication.
  # Other members must present a valid certificate signed by trusted-ca-file.
  # Default: false. Set to true to match --peer-client-cert-auth in start.sh.
  client-cert-auth: true

  # Path to the peer server TLS trusted CA cert file.
  # Usually the same CA as the one used for clients.
  trusted-ca-file: /etc/etcd/ssl/ca.crt

  # Peer TLS using generated certificates.
  # Disabled: the certificates above are used instead.
  auto-tls: false

  # Allowed CN for inter peer authentication.
  allowed-cn:

  # Allowed TLS hostname for inter peer authentication.
  allowed-hostname:

# The validity period of the self-signed certificate, the unit is year.
# Default: 1.
self-signed-cert-validity: 1

# Log level: debug, info, warn, error, panic or fatal.
# etcd defaults to info (the upstream sample sets debug); info is recommended
# for production.
log-level: info

# Logger implementation; zap is a structured logging library for Go.
logger: zap

# Specify 'stdout' or 'stderr' to skip journald logging even when running under systemd.
# Several targets may be listed, here stderr plus a log file.
log-outputs: [stderr, "/srv/etcd/node/logs/etcd.log"]

# Force to create a new one member cluster.
force-new-cluster: false

auto-compaction-mode: periodic
auto-compaction-retention: "1"

# Limit etcd to a specific set of tls cipher suites
cipher-suites: [
  TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256,
  TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384
]

# Limit etcd to specific TLS protocol versions
tls-min-version: 'TLS1.2'
tls-max-version: 'TLS1.3'
```

Confirm that the configuration file has been changed:

```bash
[root@etcd-node2 node]# grep node config/etcd.yaml
name: 'node2'
data-dir: /srv/etcd/node/data.etcd
initial-cluster: node1=https://192.168.56.121:2380,node2=https://192.168.56.122:2380,node3=https://192.168.56.123:2380
  cert-file: /etc/etcd/ssl/node2.crt
  key-file: /etc/etcd/ssl/node2.key
  cert-file: /etc/etcd/ssl/node2.crt
  key-file: /etc/etcd/ssl/node2.key
log-outputs: [stderr, "/srv/etcd/node/logs/etcd.log"]
[root@etcd-node2 node]# grep '192.168.56.12' config/etcd.yaml
listen-peer-urls: https://192.168.56.122:2380
listen-client-urls: https://192.168.56.122:2379
initial-advertise-peer-urls: https://192.168.56.122:2380
advertise-client-urls: https://192.168.56.122:2379
initial-cluster: node1=https://192.168.56.121:2380,node2=https://192.168.56.122:2380,node3=https://192.168.56.123:2380
[root@etcd-node2 node]#
```

Stop the previous etcd service:

```bash
[root@etcd-node2 node]# ps -ef|grep etcd
root 1418 1 1 22:27 pts/0 00:00:23 etcd --data-dir=data.etcd --name node2 --initial-advertise-peer-urls https://192.168.56.122:2380 --listen-peer-urls https://192.168.56.122:2380 --advertise-client-urls https://192.168.56.122:2379 --listen-client-urls https://192.168.56.122:2379 --initial-cluster node1=https://192.168.56.121:2380,node2=https://192.168.56.122:2380,node3=https://192.168.56.123:2380 --initial-cluster-state new --initial-cluster-token token-01 --client-cert-auth --trusted-ca-file /etc/etcd/ssl/ca.crt --cert-file /etc/etcd/ssl/node2.crt --key-file /etc/etcd/ssl/node2.key --peer-client-cert-auth --peer-trusted-ca-file /etc/etcd/ssl/ca.crt --peer-cert-file /etc/etcd/ssl/node2.crt --peer-key-file /etc/etcd/ssl/node2.key
root 1514 1394 0 23:05 pts/0 00:00:00 grep --color=always etcd
[root@etcd-node2 node]# ./stop.sh
[root@etcd-node2 node]# ps -ef|grep etcd
root 1523 1394 0 23:05 pts/0 00:00:00 grep --color=always etcd
[root@etcd-node2 node]#
```

Start etcd from the configuration file:

```bash
[root@etcd-node2 node]# ./start_by_config.sh
[root@etcd-node2 node]# nohup: appending output to ‘nohup.out’
[root@etcd-node2 node]# ps -ef|grep etcd
root 1525 1 3 23:05 pts/0 00:00:00 etcd --config-file=/srv/etcd/node/config/etcd.yaml
root 1533 1394 0 23:05 pts/0 00:00:00 grep --color=always etcd
[root@etcd-node2 node]#
```

etcd started successfully.

Check the cluster status on node 2:

```bash
[root@etcd-node2 ~]# ecs
+-----------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
| ENDPOINT | ID | VERSION | DB SIZE | IS LEADER | IS LEARNER | RAFT TERM | RAFT INDEX | RAFT APPLIED INDEX | ERRORS |
+-----------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
| https://192.168.56.121:2379 | e14cb1abc9daea5b | 3.5.18 | 25 kB | true | false | 18 | 766 | 766 | |
| https://192.168.56.122:2379 | d553b4da699c7263 | 3.5.18 | 25 kB | false | false | 18 | 767 | 767 | |
| https://192.168.56.123:2379 | a7d7b09bf04ad21b | 3.5.18 | 25 kB | false | false | 18 | 768 | 768 | |
+-----------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
[root@etcd-node2 ~]#
```

The status is healthy as well. Next, test reads and writes:

```bash
[root@etcd-node2 ~]# etcdctl --user root --password securePassword get greeting
greeting
Hello, etcd
[root@etcd-node2 ~]# etcdctl --user root --password securePassword get config
config
etcd.yaml
[root@etcd-node2 ~]# etcdctl --user root --password securePassword put config etcd_node2.yaml
OK
[root@etcd-node2 ~]# etcdctl --user root --password securePassword get config
config
etcd_node2.yaml
[root@etcd-node2 ~]#
```

Reads and writes also work on node 2.

2.3.2 Restarting etcd on Node 3

The modified configuration file for node 3:

```yaml
# This is the configuration file for the etcd server.

# Human-readable name for this member.
# Must be unique in the cluster; the default is 'default'.
name: 'node3'

# Path to the data directory.
data-dir: /srv/etcd/node/data.etcd

# Path to the dedicated wal directory.
# By default the WAL lives under data-dir. When set, etcd writes WAL files
# here instead of data-dir, separating them from other runtime data.
# Left unset so that the data layout of the existing cluster is not changed.
wal-dir:

# Number of committed transactions to trigger a snapshot to disk.
snapshot-count: 10000

# Time (in milliseconds) of a heartbeat interval.
# How often the leader sends heartbeats to the followers.
heartbeat-interval: 100

# Time (in milliseconds) for an election to timeout.
# A follower that receives no heartbeat within this time starts a new
# election. Default: 1000 ms.
election-timeout: 1000

# Raise alarms when backend size exceeds the given quota. 0 means use the
# default quota.
quota-backend-bytes: 0

# List of comma separated URLs to listen on for peer traffic.
# Used for data replication between cluster members.
listen-peer-urls: https://192.168.56.123:2380

# List of comma separated URLs to listen on for client traffic.
listen-client-urls: https://192.168.56.123:2379

# Maximum number of snapshot files to retain (0 is unlimited).
max-snapshots: 5

# Maximum number of wal files to retain (0 is unlimited).
max-wals: 5

# Comma-separated white list of origins for CORS (cross-origin resource sharing).
cors:

# List of this member's peer URLs to advertise to the rest of the cluster.
# The URLs needed to be a comma-separated list.
initial-advertise-peer-urls: https://192.168.56.123:2380

# List of this member's client URLs to advertise to the public.
# The URLs needed to be a comma-separated list.
# These are the URLs that clients actually connect to.
advertise-client-urls: https://192.168.56.123:2379

# Discovery URL used to bootstrap the cluster.
discovery:

# Valid values include 'exit', 'proxy'
# Behavior when the discovery service fails.
discovery-fallback: 'proxy'

# HTTP proxy to use for traffic to discovery service.
discovery-proxy:

# DNS domain used to bootstrap initial cluster (DNS SRV discovery).
discovery-srv:

# Comma separated string of initial cluster configuration for bootstrapping.
# Example: initial-cluster: "infra0=http://10.0.1.10:2380,infra1=http://10.0.1.11:2380,infra2=http://10.0.1.12:2380"
# Lists the name and peer URL of every member: name1=url1,name2=url2,...
initial-cluster: node1=https://192.168.56.121:2380,node2=https://192.168.56.122:2380,node3=https://192.168.56.123:2380

# Initial cluster token for the etcd cluster during bootstrap.
# Distinguishes this cluster from others; the default 'etcd-cluster' can
# collide between clusters, so a custom value is recommended.
initial-cluster-token: 'token-01'

# Initial cluster state ('new' or 'existing').
# 'new' bootstraps a new cluster; 'existing' joins an existing one.
initial-cluster-state: 'new'

# Reject reconfiguration requests that would cause quorum loss.
strict-reconfig-check: false

# Enable runtime profiling data via HTTP server
# Served under the client URL + "/debug/pprof/".
enable-pprof: true

# Valid values include 'on', 'readonly', 'off'
# Proxy mode.
proxy: 'off'

# Time (in milliseconds) an endpoint will be held in a failed state.
proxy-failure-wait: 5000

# Time (in milliseconds) of the endpoints refresh interval.
proxy-refresh-interval: 30000

# Time (in milliseconds) for a dial to timeout.
proxy-dial-timeout: 1000

# Time (in milliseconds) for a write to timeout.
proxy-write-timeout: 5000

# Time (in milliseconds) for a read to timeout.
proxy-read-timeout: 0

# Client transport security
client-transport-security:
  # Path to the client server TLS cert file.
  # Server certificate this member presents to clients.
  cert-file: /etc/etcd/ssl/node3.crt

  # Path to the client server TLS key file.
  # Private key matching cert-file; restrict its permissions (chmod 600).
  key-file: /etc/etcd/ssl/node3.key

  # Enable client cert authentication.
  # Clients must present a valid certificate signed by trusted-ca-file.
  # Default: false. Set to true to match the --client-cert-auth flag that
  # start.sh uses for this TLS cluster with role-based access control.
  client-cert-auth: true

  # Path to the client server TLS trusted CA cert file.
  # CA used to verify client certificates.
  trusted-ca-file: /etc/etcd/ssl/ca.crt

  # Client TLS using generated certificates
  # Disabled: the certificates above are used instead.
  auto-tls: false

# Peer (member-to-member) transport security
peer-transport-security:
  # Path to the peer server TLS cert file.
  cert-file: /etc/etcd/ssl/node3.crt

  # Path to the peer server TLS key file.
  key-file: /etc/etcd/ssl/node3.key

  # Enable peer client cert authentication.
  # Other members must present a valid certificate signed by trusted-ca-file.
  # Default: false. Set to true to match --peer-client-cert-auth in start.sh.
  client-cert-auth: true

  # Path to the peer server TLS trusted CA cert file.
  # Usually the same CA as the one used for clients.
  trusted-ca-file: /etc/etcd/ssl/ca.crt

  # Peer TLS using generated certificates.
  # Disabled: the certificates above are used instead.
  auto-tls: false

  # Allowed CN for inter peer authentication.
  allowed-cn:

  # Allowed TLS hostname for inter peer authentication.
  allowed-hostname:

# The validity period of the self-signed certificate, the unit is year.
# Default: 1.
self-signed-cert-validity: 1

# Log level: debug, info, warn, error, panic or fatal.
# etcd defaults to info (the upstream sample sets debug); info is recommended
# for production.
log-level: info

# Logger implementation; zap is a structured logging library for Go.
logger: zap

# Specify 'stdout' or 'stderr' to skip journald logging even when running under systemd.
# Several targets may be listed, here stderr plus a log file.
log-outputs: [stderr, "/srv/etcd/node/logs/etcd.log"]

# Force to create a new one member cluster.
force-new-cluster: false

auto-compaction-mode: periodic
auto-compaction-retention: "1"

# Limit etcd to a specific set of tls cipher suites
cipher-suites: [
  TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256,
  TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384
]

# Limit etcd to specific TLS protocol versions
tls-min-version: 'TLS1.2'
tls-max-version: 'TLS1.3'
```

Stop the previous etcd service and start etcd again with the start_by_config.sh script:

[root@etcd-node3 node]# ps -ef|grep etcd

root 1527 1 1 22:59 pts/0 00:00:06 etcd --config-file=/srv/etcd/node/config/etcd.yaml

root 1569 1399 0 23:09 pts/0 00:00:00 grep --color=always etcd

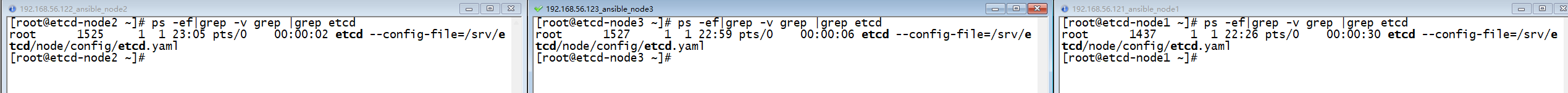

[root@etcd-node3 node]#到此三个节点都使用配置文件方式启动了:

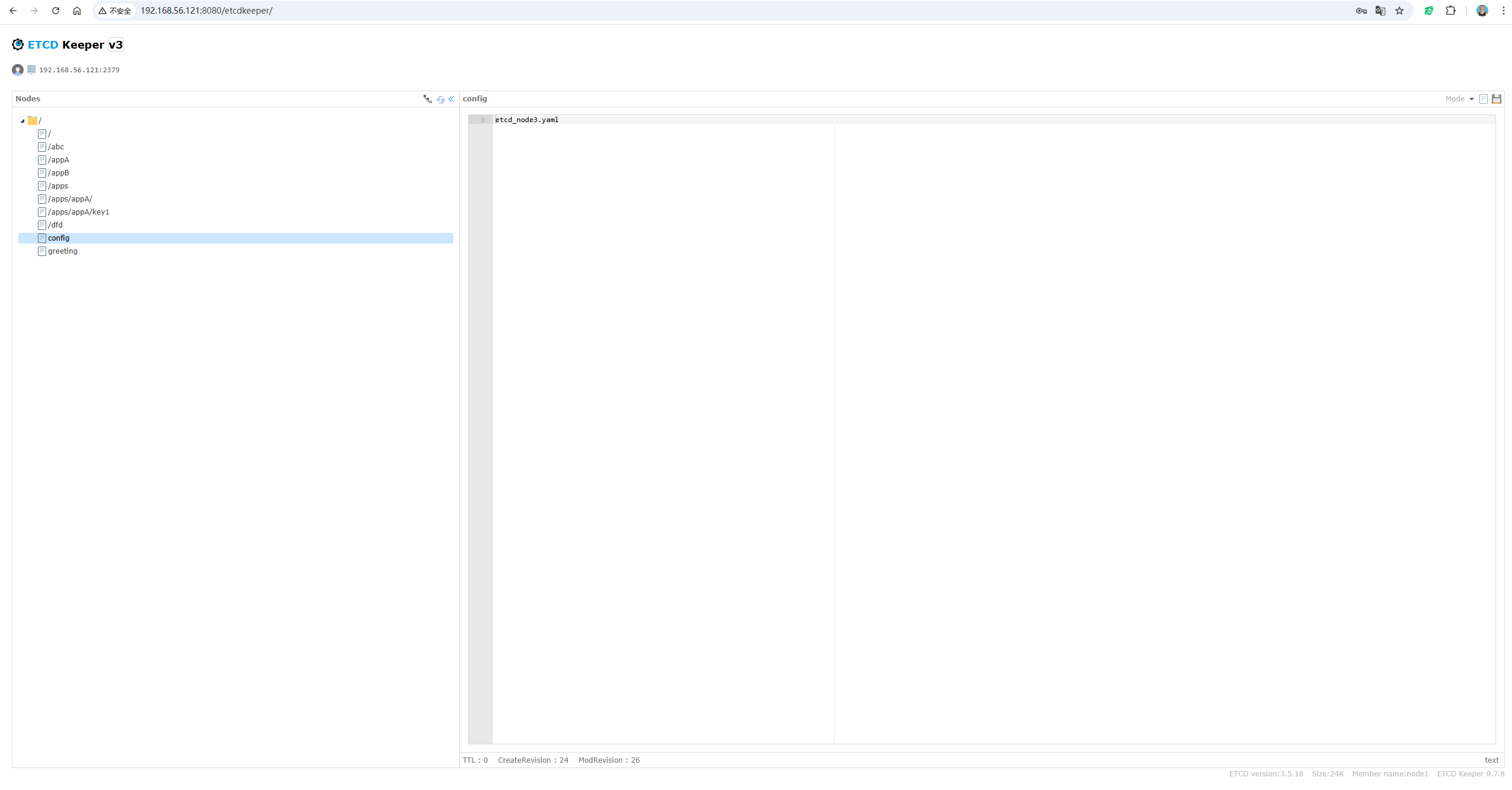

After starting etcdkeeper, its web interface also reads the stored data normally.
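With three configuration files maintained by hand, it helps to compare only the effective settings. A small sketch: strip full-line comments and blank lines, then diff two members' files; for this cluster the differences should be limited to the member name, the member's own URLs and the certificate paths. `effective_settings` and `diff_node_configs` are hypothetical helpers.

```bash
# effective_settings: hypothetical helper. Prints a YAML config without
# full-line comments and blank lines, so only the settings remain.
effective_settings() {
  grep -Ev '^[[:space:]]*(#|$)' "$1"
}

# diff_node_configs: hypothetical helper. Shows only the settings that differ
# between two config files; returns diff's exit status (0 = identical).
diff_node_configs() {
  local a b rc
  a="$(mktemp)" && b="$(mktemp)" || return 2
  effective_settings "$1" > "$a"
  effective_settings "$2" > "$b"
  diff "$a" "$b"
  rc=$?
  rm -f "$a" "$b"
  return "$rc"
}
```

For example, with node 2's file copied to node 1 under a hypothetical name: `diff_node_configs /srv/etcd/node/config/etcd.yaml etcd-node2.yaml`.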

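2.4 Benefits of Starting etcd from a Configuration File

2.4.1 Centralized Configuration Management

- Single place to maintain: all parameters live in one file (such as etcd.conf.yml) instead of being scattered across long commands or environment variables.
- Version control: the configuration file can be kept in Git or another version control system, which makes it easy to track the change history and roll back.
- Readability: a structured file (YAML/JSON) is easier to read and modify than a long command line.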

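2.4.2 Less Redundancy, Fewer Errors

- Less repetition: in a cluster deployment, every member can start from one common template that differs only in a few per-member fields (name, own URLs, certificate paths), so parameters are not retyped for each node.
- Fewer omissions: complex parameter combinations (such as TLS certificate paths and the cluster member list) are easier to check for correctness in a file.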

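2.4.3 Support for Complex Configuration

- Nested parameters: the configuration file supports hierarchical settings (in etcd's file, the TLS settings are grouped under client-transport-security and peer-transport-security), which suits complex cluster configurations.
- Multiple environments: separate files (such as etcd-dev.yml and etcd-prod.yml) make it quick to switch between development, test, and production environments.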
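Switching between such files can rely on the `ETCD_CONFIG_FILE` environment variable mentioned in the official documentation quoted in section 2. A minimal sketch, assuming per-environment files named etcd-<env>.yml in one directory; `use_etcd_env` and its default directory are hypothetical.

```bash
# use_etcd_env: hypothetical helper. Selects etcd-<env>.yml by exporting
# ETCD_CONFIG_FILE, which etcd accepts as an alternative to --config-file.
use_etcd_env() {
  local envname="$1" dir="${2:-/srv/etcd/node/config}"
  local file="$dir/etcd-$envname.yml"
  if [ ! -r "$file" ]; then
    echo "no configuration for environment '$envname': $file" >&2
    return 1
  fi
  export ETCD_CONFIG_FILE="$file"
}
```

For example, `use_etcd_env prod && nohup etcd &` starts etcd with etcd-prod.yml without repeating the path on the command line.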

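2.4.4 Other Advantages

- Controlled changes: etcd reads the configuration file only when it starts, so editing the file does not affect a running member until that member is restarted. In production, apply configuration changes with a rolling restart, one member at a time, to keep the cluster stable.
- Tool integration: configuration management tools (Ansible, Chef) can manage the file directly, which simplifies automated deployment.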
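For the rolling restart mentioned above, one common approach is to restart the followers one at a time and the leader last, which limits the number of leader elections. A sketch that derives that order from the status table, assuming the `etcdctl endpoint status -w table` layout that `ecs` prints in section 2.2; `restart_order` is a hypothetical helper. The restart of each member (stop.sh, then start_by_config.sh on that node, with a status check before moving on) is left to the operator.

```bash
# restart_order: hypothetical helper. Reads an `etcdctl endpoint status -w table`
# table on stdin and prints the endpoints with followers first, leader last.
restart_order() {
  awk -F'|' '
    $2 ~ /https?:\/\// {
      ep = $2;   gsub(/ /, "", ep)       # ENDPOINT column
      role = $6; gsub(/ /, "", role)     # IS LEADER column
      if (role == "true") leader = ep
      else print ep
    }
    END { if (leader != "") print leader }'
}
```

For the cluster in this article, `ecs | restart_order` lists 192.168.56.122 and 192.168.56.123 before the leader 192.168.56.121. Alternatively, `etcdctl move-leader` can hand leadership to another member before the current leader is restarted.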

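2.5 Caveats

- File permissions: keep the configuration file's permissions strict (for example 600) so that sensitive information, such as certificate and key paths, is not exposed.

```bash
[root@etcd-node1 ~]# chmod 600 /srv/etcd/node/config/etcd.yaml
[root@etcd-node1 ~]# ll /srv/etcd/node/config/etcd.yaml
-rw------- 1 root root 7711 May 7 22:23 /srv/etcd/node/config/etcd.yaml
[root@etcd-node1 ~]#
```

- Flag precedence: when `--config-file` (or `ETCD_CONFIG_FILE`) is set, etcd ignores all other command-line flags and environment variables. Extra flags do not override values in the file, so every setting must be written in the file.
- Compatibility: check which configuration formats (YAML/JSON) your etcd version supports.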
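A small audit for the file-permission note above. This is only a sketch: `check_private_files` is a hypothetical helper, it relies on GNU `stat -c '%a'` (available on CentOS 7), and it treats only modes 600 and 400 as acceptable.

```bash
# check_private_files: hypothetical helper. Warns about every file whose mode
# is not 600 or 400 (or that is missing); returns non-zero if any file fails.
check_private_files() {
  local f mode rc=0
  for f in "$@"; do
    if ! mode="$(stat -c '%a' "$f" 2>/dev/null)"; then
      echo "MISSING: $f" >&2
      rc=1
      continue
    fi
    case "$mode" in
      600|400) ;;                                          # owner-only: OK
      *) echo "WARN: $f has mode $mode (expected 600)" >&2; rc=1 ;;
    esac
  done
  return "$rc"
}
```

On node 1 of this article it could be run as `check_private_files /srv/etcd/node/config/etcd.yaml /etc/etcd/ssl/node1.key`.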

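3. Summary

Starting etcd from a configuration file is a best practice for production environments, especially for cluster deployments, security-sensitive setups, and systems that need long-term maintenance: it makes the configuration more reliable, easier to maintain, and easier to extend.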